Rethinking Quantization-Aware Training: Why Your QAT Length is Probably Wrong

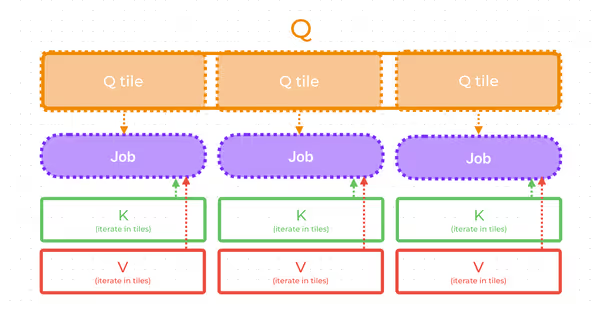

Training quantized neural networks involves a fundamental trade-off: given a fixed compute budget, how much should go to full-precision pretraining and how much to quantization-aware training (QAT)?